From Co-Analyst to Autonomous Intelligence: Our Vision for Healthcare AI

by Yubin Park, Co-Founder / CTO

We've lived and breathed healthcare data analytics for years. And here's what people outside the industry don't see: healthcare operations is extraordinarily complex and dynamic.

New payment models from CMS. New data requirements from regulators. New competitive strategies from health plans. Every day is different. Every day demands new insights.

And the data team? They're always in fire-drill mode, fighting a different issue every day. They spend countless hours in Power BI and Tableau delivering insights that often go stale because the market shifted again. Then they do it all over.

When generative AI arrived, we saw the opportunity. Maybe analytics could be far more dynamic. Maybe the intelligence layer could move at the speed the business actually moves, not the speed of dashboard refresh cycles.

The answer wasn't making better dashboards. The answer wasn't making data pipelines faster.

The answer was: how do we create a super analyst who understands healthcare and delivers the insights we need, when we need them?

Last week, Anthropic unveiled Claude Cowork, AI that lets non-technical people do knowledge work without writing code. And something clicked. This was the parallel to what we've been building, and the analogy finally made our work easier to explain.

The Gap in the Market

Here's what's available today:

General AI platforms like ChatGPT and Claude are incredibly powerful. They can write code, analyze data, generate insights. But ask them about Medicare Advantage risk adjustment reconciliation or RADV audit preparation, and you're starting from zero every single time. They have no memory of CMS payment timelines, no understanding of how provider networks actually work, no context about what "good" looks like in fraud detection.

Traditional healthcare analytics vendors, such as enterprise BI platform providers, have deep domain expertise. They know Medicare inside and out. But they're enterprise software from a different era: implementation timelines measured in quarters, rigid dashboards that require IT to modify, and workflows designed for how things worked five years ago, not how lean teams operate today.

Companies like Anthropic and OpenAI are moving aggressively into healthcare with clinical AI tools, but they're focused on prior authorization, clinical documentation, and patient-facing workflows. That's important work, but it's not payer operations. It's not fraud detection. It's not the analytics layer that keeps health plans running.

The white space: Conversational AI with deep healthcare operations expertise, built for how modern teams actually work.

That's what we're building.

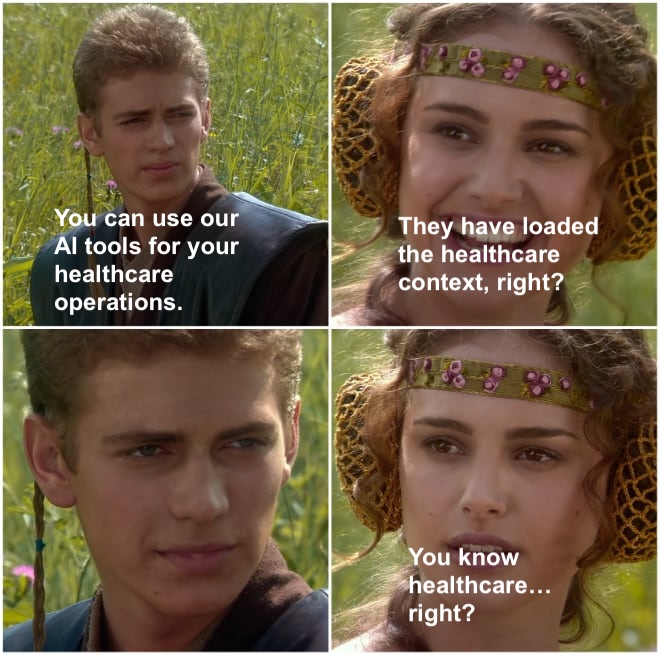

The moment of realization: Generic AI tools don't come with healthcare expertise pre-loaded. Someone has to build that in.

What We're Learning: The Gap Between Domain and Engineering

Here's the fundamental problem in healthcare analytics: you almost never get both deep domain expertise and engineering expertise in the same person.

Domain experts understand Medicare policies, fraud patterns, and network dynamics—but they don't write code.

Data engineers can build pipelines and dashboards—but they don't understand why a certain HCC code pattern matters or what "good" looks like in RADV prep.

The gap between them makes operations incredibly inefficient. Something always gets lost in communication. Deliverables are usually not quite right. Many iterations. Lost time.

What healthcare needs is data engineers with deep domain expertise—which is exactly what we are. That's how we started: putting our knowledge directly into the system.

The goal: domain experts ask questions directly and get answers. No tickets. No coordination overhead. No waiting.

"Show me high-risk providers in our network." "Are there billing anomalies in cardiology this month?" "Which ACOs are most aligned with our value-based care strategy?"

Just ask. Get answers. Move forward.

Top tip

The insight: Healthcare rarely has people who are both domain experts AND data engineers. That gap creates all the coordination overhead. Falcon bridges that gap—it's the data engineer with healthcare expertise that every operations team wishes they had.

The Claude Cowork Parallel

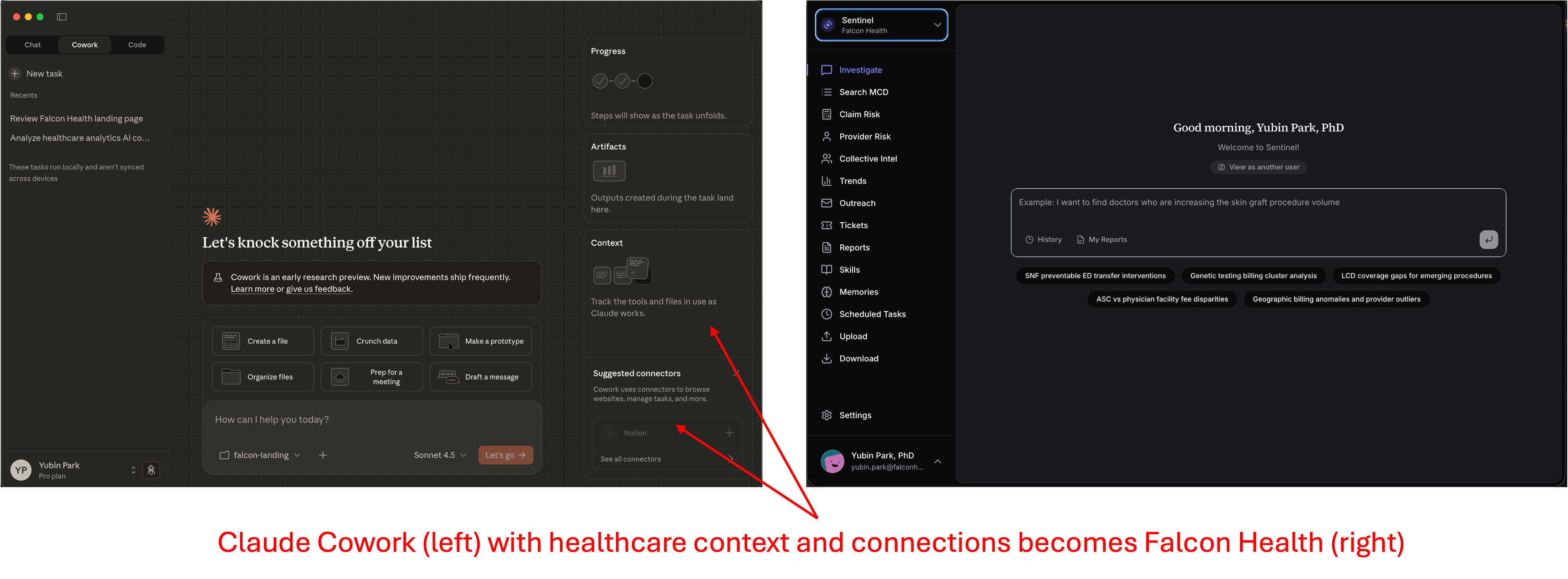

If you've seen Anthropic's recent Claude Cowork launch, you'll recognize what we're building.

Claude Cowork is "Claude Code for non-technical knowledge workers." Instead of writing code, you ask questions, build reports, and automate workflows in natural language.

That's exactly our philosophy, but for healthcare operations.

Not for email and documents—for claims data, provider networks, and Medicare reconciliation.

Add healthcare context and connections to Claude Cowork, and you get Falcon Health. The interface philosophy is the same: natural language, no coding required. The difference is everything underneath.

Why Healthcare Context Isn't Trivial to Add

Here's what people underestimate: adding healthcare context to AI isn't just about feeding it CMS documentation or Medicare manuals.

It's about understanding:

- How data actually flows through healthcare operations (not how it's supposed to flow)

- Which questions matter in real investigations (not just what's theoretically possible)

- What "good" looks like in fraud detection, network optimization, and risk adjustment (learned from practice, not policy)

- How decisions get made with imperfect data under regulatory pressure

This isn't prompt engineering. It's not RAG over PDF files. It's encoding operational expertise that comes from:

- Working at CMS and understanding how policies get interpreted in practice

- Building healthcare companies and seeing where data breaks down

- Holding PhDs in AI and knowing which techniques actually work at scale

- Most importantly: watching thousands of real healthcare operations workflows and learning what matters

We believe we're uniquely positioned to build this because our team has lived all these experiences. We've been the regulators, the operators, the data scientists, and the AI researchers. That combination is rare, and it's not something you can replicate by hiring a healthcare consultant to write prompts.

Top tip

Why this matters: The difference between "AI with healthcare RAG" and "AI that understands healthcare operations" is like the difference between reading a surgery textbook and being a surgeon. You need both the knowledge AND the practiced intuition that only comes from real operational experience.

Generic AI tools are amazing for general knowledge work. But healthcare operations isn't general knowledge work. And the gap between "powerful AI" and "AI that understands healthcare operations" is wider than most people think.

The Three Phases: Where This Is Going

Think of it like Claude Cowork's evolution, but healthcare-specific:

Phase 1: Co-Analyst (Available Now)

You ask questions in plain language. The AI understands healthcare context, pulls from the right data sources (your claims data, CMS benchmarks, provider networks), and delivers presentation-ready insights. Try our solutions like Pulse, Sentinel, and Scope.

Time from question to answer: minutes, not weeks.

This solves the immediate problem: domain experts spending days coordinating with data teams to get basic analytics. Now they just ask.

Phase 2: Automated Intelligence (In Progress)

Here's where it gets interesting. After you ask the same types of questions a few times, the system learns your patterns.

"You've been checking high-risk providers every Monday. Want me to monitor that automatically and alert you when something changes?"

"You always filter by this region and these risk thresholds. Should I save that as a template?"

This phase isn't about you building tools yourself. It's about the AI noticing your repeated workflows and offering to automate them. You stay in control, but the system starts anticipating your needs.
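
To make that concrete, here is a minimal Python sketch of how repeated questions could be spotted and turned into an automation offer. It is an illustration, not Falcon's actual implementation: the `QueryEvent` record, the `suggest_automations` function, the intent labels, and the three-repeat threshold are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date

# Fixed English weekday names, indexed by date.weekday() (Monday == 0).
WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday")


@dataclass(frozen=True)
class QueryEvent:
    """One question a user asked (hypothetical log record)."""
    user: str
    intent: str      # normalized question type, e.g. "high_risk_providers"
    asked_on: date


def suggest_automations(events, min_repeats=3):
    """Return (user, intent, weekday) triples seen at least min_repeats times."""
    counts = Counter(
        (e.user, e.intent, WEEKDAYS[e.asked_on.weekday()]) for e in events
    )
    return sorted(key for key, n in counts.items() if n >= min_repeats)


# Illustrative data only: three Monday checks and one unrelated question.
events = [
    QueryEvent("dana", "high_risk_providers", date(2025, 1, 6)),
    QueryEvent("dana", "high_risk_providers", date(2025, 1, 13)),
    QueryEvent("dana", "high_risk_providers", date(2025, 1, 20)),
    QueryEvent("dana", "cardiology_billing_anomalies", date(2025, 1, 15)),
]

for user, intent, weekday in suggest_automations(events):
    print(f"{user}: you check '{intent}' on {weekday}s. Monitor it automatically?")
```

In this toy log, the three Monday checks cross the threshold and trigger one offer, while the single cardiology question does not. The offer is only a prompt: the user still decides whether to turn monitoring on.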

The intelligence here isn't just in the AI model—it's in learning from behavioral patterns across users. Which fraud alerts actually catch real problems? Which dashboard layouts do people actually use? Which analyses lead to decisions versus which get ignored?

That's the moat we're building: operational intelligence learned from how healthcare operations teams actually work.

Phase 3: Autonomous Intelligence

Eventually, you don't see a platform at all. The intelligence layer just runs.

Monday morning, you get a summary of network performance changes that matter. A compliance alert fires when billing patterns shift in ways that historically indicated problems. Market intelligence about competitor ACO strategies arrives when you're preparing for strategic planning.

Insights surface before you ask for them. Not because the AI is making decisions for you, but because it's learned what information you need, when you need it, and how you want to see it.

This is the end state: healthcare operations with an intelligence layer that operates at the speed of the business, not the speed of coordination overhead.

Building on Foundation Models, Not Competing With Them

You might be wondering: "Can't OpenAI or Anthropic just add healthcare knowledge?"

Here's the thing: we're using their models. Transparently. Anthropic's Claude powers much of Falcon's intelligence. We're not competing with foundation models—we're building the healthcare operations layer on top of them.

The gap isn't about better AI technology. It's about thousands of hours of healthcare operations workflow data that foundation model companies can't easily replicate. They can train on CMS documentation, but they're not learning:

- How network directors actually investigate provider performance (question → follow-up → decision patterns)

- Which fraud detection approaches work in practice versus which look good on paper

- What "good" looks like in RADV prep (not just what the regulations say)

- The behavioral intelligence from real compliance investigations

That's our moat: operational intelligence learned from how healthcare operations teams actually work. Every question asked, every report generated, every alert reviewed feeds back into making the system smarter for everyone.

The entry point is simple: Ask a question in plain language.

Where it goes is much bigger: The autonomous intelligence layer for healthcare operations.

That's the future we're building. One question at a time.

Questions about where we're heading? We're always happy to talk. Reach out anytime.